
Path to production for LLM agents


Outline

· Why agents, and what are planning, reasoning, actions and observations?

· How will we attempt to solve it?

· Taking inspiration from some alternative architectural patterns

· Implementation design patterns and frameworks deep-dive

∘ LangGraph

∘ Let’s dive into how to combine ReWOO-style modular planning and action taking with RAG architectures to build something sensible.

· References

Why agents, and what are planning, reasoning, actions and observations?

In popular LLM-based agent patterns like ReAct, reasoning is interleaved with Knowledge Retrieval and Action Execution in a closed loop. This generates a large number of redundant tokens, with no control over intermediate actions or end results. It is also a nightmare to monitor and debug.
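To make the "redundant tokens" point concrete, here is a toy, framework-free sketch of a ReAct-style loop. The scripted replies and the `lookup` tool are hypothetical stand-ins for a real model and a real retrieval tool, and prompt length in characters is used as a rough proxy for tokens. The point it shows: every iteration re-sends the whole growing transcript (thoughts, actions and observations) to the model.

```python
from typing import Callable, List, Tuple

# Hypothetical scripted "LLM": one Thought/Action per call, then a final answer
SCRIPT = [
    "Thought: I need the capital of France.\nAction: lookup[capital of France]",
    "Thought: Now I need the river that flows through it.\nAction: lookup[river in Paris]",
    "Thought: I have everything I need.\nFinal Answer: The Seine",
]


def lookup(query: str) -> str:
    """Hypothetical tool standing in for search or retrieval."""
    facts = {"capital of France": "Paris", "river in Paris": "Seine"}
    return facts.get(query, "no result")


def react_loop(task: str, llm: Callable[[str], str], max_steps: int = 5) -> Tuple[str, List[int]]:
    """Run a ReAct-style loop; return the answer and the prompt length sent on each call."""
    transcript = f"Task: {task}\n"
    prompt_sizes: List[int] = []
    for _ in range(max_steps):
        prompt_sizes.append(len(transcript))  # the full transcript is re-sent every call
        step = llm(transcript)
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip(), prompt_sizes
        # Parse "Action: tool[input]" and append the tool's observation
        action = step.split("Action:", 1)[1]
        tool_input = action[action.index("[") + 1 : action.rindex("]")]
        transcript += f"Observation: {lookup(tool_input)}\n"
    return "no answer", prompt_sizes


replies = iter(SCRIPT)
answer, sizes = react_loop("Which river flows through the capital of France?", lambda _: next(replies))
print(answer)  # The Seine
print(sizes)   # strictly increasing: each call carries every earlier step
```

With real tool outputs (retrieved documents, API payloads) the observations are much longer than in this toy, so the re-sent context grows much faster.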

This makes LLM-powered agents hard to deploy in real-world production apps, and they end up as gimmicky demo tools. So how do we go beyond linear RAG patterns and fulfill the promise of Agentic RAG, oversold in every LLM-related blog out there? And no, reasoning loops are not the solution!

LLMs are not inherently good at planning. The tokens produced while generating plans seem “human-like” and mislead people into believing that LLMs are more than token generators. To achieve successful planning and action prediction, we need custom models and dedicated techniques that improve an LLM's ability to generate valid plans, for example through PDDL (Planning Domain Definition Language); see this.
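For readers new to PDDL: a planning domain describes each action by its preconditions and effects, and a plan is valid only if every action's preconditions hold when it runs and the goal holds at the end. The sketch below checks a (for example, LLM-proposed) plan against those STRIPS-style semantics in plain Python rather than PDDL syntax. The password-reset domain and its action names are hypothetical; a real setup would express the domain in PDDL and use a proper planner or plan validator.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Tuple


@dataclass(frozen=True)
class Action:
    """STRIPS-style action: preconditions plus add/delete effects over ground facts."""
    name: str
    pre: FrozenSet[str]
    add: FrozenSet[str]
    delete: FrozenSet[str] = frozenset()


# Hypothetical toy domain for a password-reset assistant
ACTIONS: Dict[str, Action] = {
    "verify_identity": Action("verify_identity", frozenset({"user_known"}), frozenset({"verified"})),
    "send_reset_link": Action("send_reset_link", frozenset({"verified"}), frozenset({"link_sent"})),
    "close_ticket": Action(
        "close_ticket", frozenset({"link_sent"}), frozenset({"done"}), frozenset({"ticket_open"})
    ),
}


def validate_plan(plan: List[str], init: FrozenSet[str], goal: FrozenSet[str]) -> Tuple[bool, str]:
    """Simulate the plan; reject unknown actions, unmet preconditions, or an unmet goal."""
    state = set(init)
    for i, name in enumerate(plan):
        action = ACTIONS.get(name)
        if action is None:
            return False, f"step {i}: unknown action {name!r}"
        missing = action.pre - state
        if missing:
            return False, f"step {i}: {name} missing preconditions {sorted(missing)}"
        state = (state - action.delete) | action.add  # apply delete, then add effects
    if not goal <= state:
        return False, f"goal not reached, missing {sorted(goal - state)}"
    return True, "ok"


init = frozenset({"user_known", "ticket_open"})
goal = frozenset({"done"})
print(validate_plan(["verify_identity", "send_reset_link", "close_ticket"], init, goal))
# (True, 'ok')
print(validate_plan(["send_reset_link", "close_ticket"], init, goal))
# (False, "step 0: send_reset_link missing preconditions ['verified']")
```

The useful property is that rejection is mechanical and explainable: the error message can be fed back to the model as a correction instead of trusting a fluent but invalid plan.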

Even when a system can reason and plan, thanks to well-guarded representations, fine-tuning and in-context learning, the Action Taking step is still complicated and fails more often than a production system can tolerate; see this on OpenAI's GPT-4-based Function Calling.
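One common mitigation for unreliable action taking is to never execute a model-proposed tool call directly: parse it, check the tool name against an allow-list, and check the arguments against the tool's expected parameters before running anything. This sketch assumes the model returns a JSON object with `name` and `arguments` keys (similar in spirit to function-calling output, but not tied to any vendor's exact format); the `get_order_status` tool and its schema are hypothetical.

```python
import json
from typing import Any, Callable, Dict, Tuple


def get_order_status(order_id: str) -> str:
    """Hypothetical tool."""
    return f"order {order_id}: shipped"


# Allow-list: tool name -> (callable, {parameter name: expected Python type})
TOOLS: Dict[str, Tuple[Callable[..., Any], Dict[str, type]]] = {
    "get_order_status": (get_order_status, {"order_id": str}),
}


def execute_tool_call(raw: str) -> Tuple[bool, Any]:
    """Validate a model-proposed tool call; return (ok, result or error message)."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as exc:
        return False, f"malformed JSON: {exc.msg}"
    if not isinstance(call, dict):
        return False, "tool call must be a JSON object"
    name, args = call.get("name"), call.get("arguments", {})
    if not isinstance(name, str) or name not in TOOLS:
        return False, f"unknown tool: {name!r}"
    fn, schema = TOOLS[name]
    if not isinstance(args, dict) or set(args) != set(schema):
        return False, f"expected arguments {sorted(schema)}"
    for key, expected in schema.items():
        if not isinstance(args[key], expected):
            return False, f"argument {key!r} must be {expected.__name__}"
    return True, fn(**args)  # only reached when every check passed


print(execute_tool_call('{"name": "get_order_status", "arguments": {"order_id": "A17"}}'))
# (True, 'order A17: shipped')
print(execute_tool_call('{"name": "delete_account", "arguments": {}}'))
# (False, "unknown tool: 'delete_account'")
```

Failed checks return an error string rather than raising, so the caller can log it, retry with the error in the prompt, or hand off to a human.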

Last but not least, they are terrible to monitor and debug at scale, not to mention hallucinations and costs.

So how would you go about building copilots and assistants? With complex engineering and a lot of hard work! And that is what this article is about.

How will we attempt to solve it?

Before the ChatGPT explosion, the Conversational AI and Agent Assist world built pipeline-driven systems with frameworks like Haystack, plus a lot of custom engineering, to run retrieval and ranking pipelines that fed content from knowledge bases to dialogue management systems. Systems were designed around hand-crafted “intents and entities”, where language-model-based extraction teamed up with workflows to navigate the user to the desired path. This all worked well (?) in controlled scenarios. But suddenly, the whole world wanted free-flowing conversations, even when they only needed to solve simple login issues.

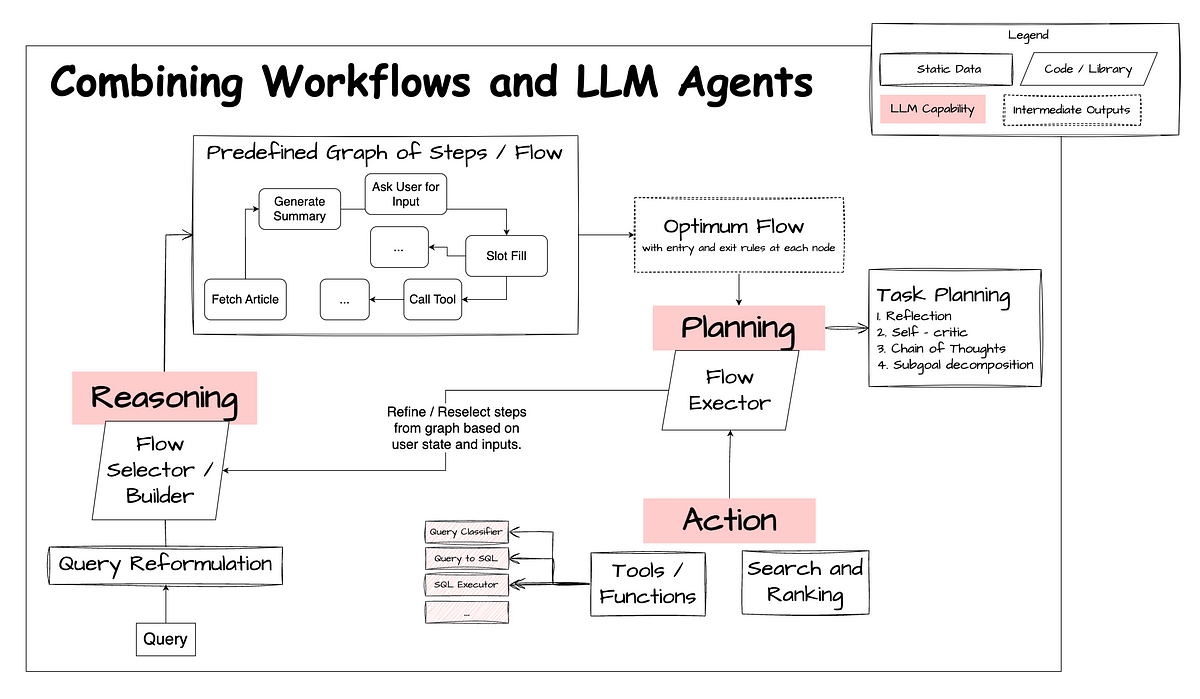

We need to find a balance between the two worlds: we should not sacrifice the expressive, natural conversational power of LLMs, but we should also build systems that solve specific problems reliably. To do this at scale, we need to combine reasoning, planning and action taking with some grounding and/or fine-tuning.
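One way to picture this balance is a hybrid router: requests that clearly match a known intent go to a deterministic, testable workflow (the old “intents and entities” world), and everything else falls through to an LLM for free-flowing conversation. The keyword matcher, the regex entity extractor, the `reset_password` workflow and the `llm_fallback` function are all hypothetical placeholders; a production system would more likely use a trained intent classifier with a confidence threshold.

```python
import re
from typing import Callable, Dict, List, Optional, Tuple


def reset_password(entities: Dict[str, str]) -> str:
    """Hypothetical deterministic workflow for a well-understood intent."""
    email = entities.get("email")
    if email is None:
        return "Which email address is the account registered with?"
    return f"A reset link has been sent to {email}."


def llm_fallback(message: str) -> str:
    """Placeholder for a call to an LLM (or a ReWOO-style agent)."""
    return f"[LLM handles: {message}]"


# Hand-crafted intents: name -> (trigger keywords, workflow)
INTENTS: Dict[str, Tuple[List[str], Callable[[Dict[str, str]], str]]] = {
    "reset_password": (["reset", "forgot", "password"], reset_password),
}
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def classify(message: str, min_hits: int = 2) -> Optional[str]:
    """Return the first intent with at least `min_hits` keyword matches, else None."""
    words = set(re.findall(r"\w+", message.lower()))
    for intent, (keywords, _) in INTENTS.items():
        if sum(k in words for k in keywords) >= min_hits:
            return intent
    return None


def route(message: str) -> str:
    intent = classify(message)
    if intent is None:
        return llm_fallback(message)  # open-ended request: let the LLM converse
    entities: Dict[str, str] = {}
    match = EMAIL_RE.search(message)
    if match:
        entities["email"] = match.group(0)  # simple regex entity extraction
    _, workflow = INTENTS[intent]
    return workflow(entities)


print(route("I forgot my password, please reset it for jo@example.com"))
# A reset link has been sent to jo@example.com.
print(route("Can you compare your premium and basic plans?"))
# [LLM handles: Can you compare your premium and basic plans?]
```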

In this first article, we look at architectural patterns for doing reasoning, planning and action taking in a cost-effective and reliable way. We then look at how to implement them in production using frameworks like Haystack, LangGraph, DSPy, AutoGen, etc.

Finally, we will brainstorm architectures for deploying a microservice-oriented implementation of the ecosystem.

Planning and Reasoning with a workflow in mind.

Taking inspiration from some alternative architectural patterns

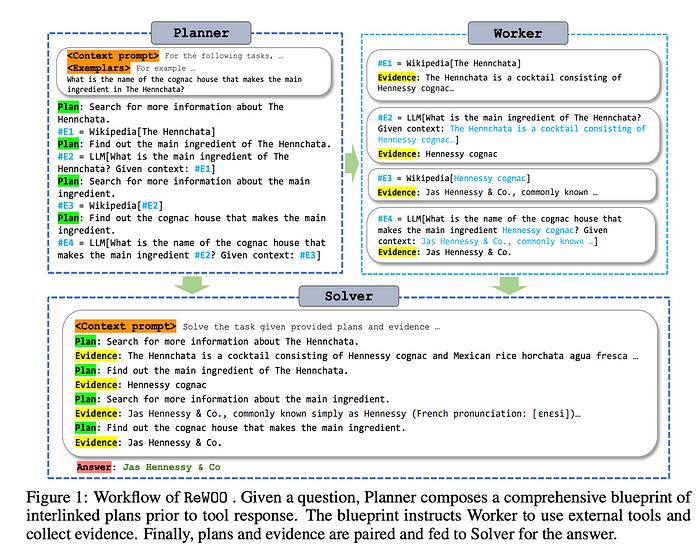

Notable research supporting this line of thought is ReWOO, from a team at Microsoft (also the creators of Orca). The authors claim that breaking reasoning, planning and action taking into separate components resulted in 65% fewer tokens (i.e. lower OpenAI API costs!) and accuracy gains of 4–5% in the generated answers.

Even more interesting were the observations on behavior when tool usage fails: the ReAct system keeps generating tokens, whereas ReWOO minimises tool misuse and avoids generating incorrect outputs. This is promising for real-world scenarios.

Hence, a system broken down into a Planner, Worker, Solver, etc. is more robust and less costly.

A LangChain/LangGraph notebook for this can be found here. The key principle: instead of looping through Plan -> Tool Usage -> Observation -> Reasoning -> Plan, we generate the full list of plan steps in one call, collect the observations from the tools, and generate the final answer in one more call.
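Stripped of any framework, the principle fits in a few functions. In this sketch `fake_llm` is a hypothetical stand-in that returns a canned plan for the planner prompt and simply echoes the last piece of evidence for the solver prompt, and `TOOLS` holds a single toy lookup tool. The step format (`Plan: ... #E1 = Tool[input]`) mirrors the regex used in the LangGraph planner code. The planner and the solver each call the model once, however many tool steps the plan contains (the worker here uses no LLM at all).

```python
import re
from typing import Callable, Dict, List, Tuple

# Same step format as the LangGraph planner: "Plan: ... #E1 = Tool[input]"
PLAN_RE = re.compile(r"Plan:\s*(.+)\s*(#E\d+)\s*=\s*(\w+)\s*\[([^\]]+)\]")

# Toy tool standing in for search, retrieval or an API
TOOLS: Dict[str, Callable[[str], str]] = {
    "Lookup": lambda q: {"capital of France": "Paris", "river in Paris": "Seine"}.get(q, "unknown"),
}

llm_calls: List[str] = []


def fake_llm(prompt: str) -> str:
    """Hypothetical LLM: a canned plan for the planner, the last evidence for the solver."""
    llm_calls.append(prompt)
    if prompt.startswith("PLAN"):
        return ("Plan: Find the capital. #E1 = Lookup[capital of France]\n"
                "Plan: Find the river through it. #E2 = Lookup[river in #E1]")
    return prompt.splitlines()[-1].split(" = ", 1)[1]


def planner(task: str) -> List[Tuple[str, str, str, str]]:
    """One LLM call produces the whole plan as (plan, var, tool, input) tuples."""
    return PLAN_RE.findall(fake_llm(f"PLAN for task: {task}"))


def worker(steps: List[Tuple[str, str, str, str]]) -> Dict[str, str]:
    """Run tools in order, substituting earlier evidence (#E1, ...) into inputs; no LLM calls."""
    evidence: Dict[str, str] = {}
    for _, var, tool, tool_input in steps:
        for k, v in evidence.items():
            tool_input = tool_input.replace(k, v)
        evidence[var] = TOOLS[tool](tool_input)
    return evidence


def solver(task: str, evidence: Dict[str, str]) -> str:
    """One LLM call turns the task plus all evidence into the final answer."""
    lines = "\n".join(f"{k} = {v}" for k, v in evidence.items())
    return fake_llm(f"SOLVE task: {task}\n{lines}")


task = "Which river flows through the capital of France?"
steps = planner(task)
evidence = worker(steps)
answer = solver(task, evidence)
print(evidence)        # {'#E1': 'Paris', '#E2': 'Seine'}
print(answer)          # Seine
print(len(llm_calls))  # 2
```

Because every intermediate value is a named variable, each step can be logged, replayed and unit-tested on its own, which addresses much of the monitoring and debugging pain described earlier.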

So is the problem solved? Do we just make three separate LLM calls and call it a day? Yes and no: we still need to make sure the prompts, model selection and in-context examples are appropriate for the given use case.
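As a small illustration of tailoring prompts and in-context examples per use case, the sketch below assembles a planner prompt from a base instruction plus a few worked examples chosen for the domain. The `EXAMPLES` registry, the tool names (`OrderAPI`, `KBSearch`, `Search`) and the wording are hypothetical; in practice the examples would be curated, or selected by similarity to the incoming task, and evaluated separately for each use case.

```python
from typing import Dict, List, Tuple

BASE = (
    "For the following task, make a step-by-step plan. For each step write\n"
    "'Plan: <reasoning> #E<n> = <Tool>[<input>]'. Available tools: {tools}.\n"
)

# Hypothetical per-use-case in-context examples: (task, plan) pairs
EXAMPLES: Dict[str, List[Tuple[str, str]]] = {
    "support": [
        ("Why was order A17 delayed?",
         "Plan: Fetch the order. #E1 = OrderAPI[A17]\n"
         "Plan: Find the matching delay policy. #E2 = KBSearch[shipping delay #E1]"),
    ],
    "research": [
        ("Which river flows through the capital of France?",
         "Plan: Find the capital. #E1 = Search[capital of France]\n"
         "Plan: Find the river. #E2 = Search[river in #E1]"),
    ],
}


def build_planner_prompt(use_case: str, tools: List[str], task: str, k: int = 1) -> str:
    """Compose base instructions, up to k in-context examples for the use case, and the task."""
    parts = [BASE.format(tools=", ".join(tools))]
    for ex_task, ex_plan in EXAMPLES.get(use_case, [])[:k]:
        parts.append(f"Task: {ex_task}\n{ex_plan}\n")
    parts.append(f"Task: {task}\n")
    return "\n".join(parts)


print(build_planner_prompt("support", ["OrderAPI", "KBSearch"], "Can I return order B22?"))
```

Keeping the examples in a registry rather than hard-coding them into one prompt makes it easy to swap them, or the model, per use case and compare results.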

Implementation design patterns and frameworks deep-dive

Note: Although AWS Step Functions seem promising in scenarios where production-grade infra is needed and other AWS services are also used within the enterprise (like Bedrock, OpenSearch, Kendra, etc.), we will use open-source libraries and abstractions over them to discuss a probable design.

LangGraph

The LangGraph implementation of ReWOO implements the Planner (Re), Worker (Act) and Solver: https://github.com/langchain-ai/langgraph/blob/main/examples/rewoo/rewoo.ipynb

Planner

The planner generates a step-by-step strategy for executing tools and other steps that should solve the problem. Each step's output is bound to an evidence variable (#E1, #E2, ...) that later steps can reference. These steps are fed to the worker for execution and evidence collection.
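To make the expected plan format concrete: the regex in the planner code below expects one line per step of the form `Plan: <reasoning> #E<n> = <Tool>[<input>]` and turns each line into a `(plan, step_name, tool, tool_input)` tuple. Here it is applied to a made-up planner output; note how the input of `#E2` refers to the evidence from `#E1`.

```python
import re

# Same pattern as in the planner code: one (plan, step_name, tool, tool_input) tuple per step
regex_pattern = r"Plan:\s*(.+)\s*(#E\d+)\s*=\s*(\w+)\s*\[([^\]]+)\]"

# Made-up planner output; #E2's input refers to the evidence produced by #E1
sample_plan = (
    "Plan: Find the capital of France. #E1 = Google[capital of France]\n"
    "Plan: Ask which river flows through it. #E2 = LLM[Which river flows through #E1?]"
)

matches = re.findall(regex_pattern, sample_plan)
for plan, step_name, tool, tool_input in matches:
    print(step_name, tool, repr(tool_input))
# #E1 Google 'capital of France'
# #E2 LLM 'Which river flows through #E1?'
```

The `#E1` inside the second step's input is left as-is at parse time; the worker replaces it with the actual evidence before calling the tool.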

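```python
import re
from typing import List, TypedDict

from langchain_core.prompts import ChatPromptTemplate


# Graph state shared by the planner, worker and solver nodes
class ReWOO(TypedDict):
    task: str
    plan_string: str
    steps: List
    results: dict
    result: str


# `prompt` (planner instructions with a {task} placeholder) and `model`
# (any LangChain chat model) are defined earlier in the linked notebook.
# Regex to match lines of the form "Plan: ... #E1 = Tool[input]"
regex_pattern = r"Plan:\s*(.+)\s*(#E\d+)\s*=\s*(\w+)\s*\[([^\]]+)\]"
prompt_template = ChatPromptTemplate.from_messages([("user", prompt)])
planner = prompt_template | model


def get_plan(state: ReWOO):
    task = state["task"]
    result = planner.invoke({"task": task})
    # Each match is a (plan, step_name, tool, tool_input) tuple
    matches = re.findall(regex_pattern, result.content)
    return {"steps": matches, "plan_string": result.content}
```

Worker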

A few functions that execute the planned steps one at a time, substituting evidence from earlier steps into later tool inputs. Nothing special here.

```python
def _get_current_task(state: ReWOO):
    """Return the 1-based index of the next step to run, or None when all are done."""
    results = state.get("results")
    if results is None:
        return 1
    if len(results) == len(state["steps"]):
        return None
    return len(results) + 1


def tool_execution(state: ReWOO):
    """Worker node that executes the tools of a given plan."""
    _step = _get_current_task(state)
    _, step_name, tool, tool_input = state["steps"][_step - 1]
    _results = dict(state.get("results") or {})
    # Substitute evidence from earlier steps (e.g. "#E1") into this step's input
    for k, v in _results.items():
        tool_input = tool_input.replace(k, v)
    # `search` (a web-search tool) and `model` are defined in the linked notebook
    if tool == "Google":
        result = search.invoke(tool_input)
    elif tool == "LLM":
        result = model.invoke(tool_input).content
    else:
        raise ValueError(f"Unknown tool: {tool}")
    _results[step_name] = str(result)
    return {"results": _results}
```

Solver

Using the plan generated by the Planner and the evidence collected by the Worker, the Solver generates the final answer.

```python
solve_prompt = """Solve the following task or problem. To solve the problem, we have made step-by-step Plan and \
retrieved corresponding Evidence to each Plan. Use them with caution since long evidence might \
contain irrelevant information.

{plan}

Now solve the question or task according to provided Evidence above. Respond with the answer
directly with no extra words.

Task: {task}
Response:"""


def solve(state: ReWOO):
    plan = ""
    _results = state.get("results") or {}
    for _plan, step_name, tool, tool_input in state["steps"]:
        # Replace #E placeholders with the collected evidence so the solver sees real values
        for k, v in _results.items():
            tool_input = tool_input.replace(k, v)
            step_name = step_name.replace(k, v)
        plan += f"Plan: {_plan}\n{step_name} = {tool}[{tool_input}]\n"
    solver_input = solve_prompt.format(plan=plan, task=state["task"])
    result = model.invoke(solver_input)
    return {"result": result.content}
```

All of the above nodes, built as a graph:

```python
from langgraph.graph import END, StateGraph


def _route(state: ReWOO):
    """After each tool call, loop back to the worker until every step has a result."""
    if _get_current_task(state) is None:
        return "solve"
    return "tool"


graph = StateGraph(ReWOO)
graph.add_node("plan", get_plan)
graph.add_node("tool", tool_execution)
graph.add_node("solve", solve)
graph.add_edge("plan", "tool")
graph.add_edge("solve", END)
graph.add_conditional_edges("tool", _route)
graph.set_entry_point("plan")

app = graph.compile()
```

Let’s dive into how to combine ReWOO-style modular planning and action taking with RAG architectures to build something sensible.

…to be added soon

For a detailed look at design patterns for agents, including a deep dive on coding copilots like GPT Pilot, see: https://medium.com/@raunakjain_25605/design-patterns-for-compound-ai-systems-copilot-rag-fa911c7a62e0
